Artificial Intelligence

This article is meant as an introduction to Artificial Intelligence (AI).

1) What is AI?

The term “Artificial Intelligence” was coined by John McCarthy in 1955. This research field tries to simulate many human abilities: perception (for example seeing, smelling and hearing), but also thinking and decision-making.

2) Why is AI an interesting topic?

To understand the importance and attraction of AI, read the following two sections.

2.1) AI – Today

Today a lot of software already shows intelligent behaviour in some ways. In 1997 a reigning world chess champion (Garry Kasparov) lost a match to a computer for the first time. Today you can run software of that strength on an average smartphone, and you would not stand a chance of winning against it.

The amount of information is rising every day, and humans have to cope with it. Consider, for example, the amount of subject matter children have to learn in school nowadays.

In addition, society as a whole deals more and more with things like the Internet, software, hardware, smartphones and Facebook. It is growing closer together and becoming an interconnected society. Understanding and working with these trends requires a lot of knowledge. That is why information technology has become the present-day Swiss Army Knife. Large databases with millions of records are favored over tons of cumbersome paper.

So the intelligent era has already begun. Writing information on paper or on a hard drive doesn’t solve problems; Artificial Intelligence does! Intelligent software saves us a lot of time that we can use for other things in our lives.

2.2) AI – In our near future

(c) AIvoke

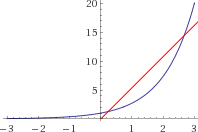

There are many critics who say that we won’t be able to master advanced AI technologies in the coming decades. Raymond Kurzweil convinced me of the opposite with his book The Singularity Is Near. He argues that human beings tend to think in a linear manner, while technology develops at an exponential rate (see the image: the blue exponential line overtakes the red line). The benefits arising from AI will continue to take complexity out of our lives. That is why Artificial Intelligence seems to be an attractive path into a promising future.
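The gap between linear and exponential growth can be made concrete with a few lines of Python. This is only a minimal sketch: the function names, starting values and growth rates are illustrative assumptions and are not taken from Kurzweil's book. It shows that a process that doubles at every step overtakes a steadily growing one even when it starts far behind.

```python
def linear_growth(step, start=10.0, increment=10.0):
    """Value after `step` steps when a fixed amount is added each step.

    The start value and increment are illustrative assumptions.
    """
    return start + increment * step


def exponential_growth(step, start=1.0, factor=2.0):
    """Value after `step` steps when the value is multiplied each step.

    The start value and factor are illustrative assumptions.
    """
    return start * factor ** step


def first_overtake(max_steps=1000):
    """Return the first step at which exponential growth exceeds linear growth."""
    for step in range(max_steps + 1):
        if exponential_growth(step) > linear_growth(step):
            return step
    return None  # no overtake within max_steps


if __name__ == "__main__":
    # Print both curves side by side for a few sample steps.
    for step in (0, 5, 10, 20):
        print(step, linear_growth(step), exponential_growth(step))
    print("exponential overtakes linear at step", first_overtake())
```

With these assumed numbers the exponential curve starts at a tenth of the linear one and still passes it after a handful of steps. After that the gap widens at every step, which is the intuition behind the argument above.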

3) Limits of AI

From my point of view this is one of the most interesting topics when it comes to AI. Imagine that someday the secrets of our brains are revealed and the human species is able to simulate them on ultra-fast computers. This would unlock advanced Artificial Intelligence (because it would work the same way our brain does). Furthermore, this software could be millions of times faster than our brains (because electrical signals travel thousands of times faster in silicon than in biological nerve cells). Please read Risks and benefits of AGI for more information about Artificial General Intelligence.

The interesting point here is that this software would be able to improve and change its own structure. It could improve its own intelligence at a speed we would not be able to keep up with. This is a recursive effect: the improved software can go on to improve itself again, and so on. The possibilities of this technique are unimaginable, and you could say it is the last invention mankind needs to make. Problems on this planet such as diseases, hunger and political disorder could be solved better than all humans together could manage.

4) Singularity

(c) Casey Reed/NASA

Artificial General Intelligence (AGI) carries a lot of risks. One way to minimize these risks is to merge with this kind of technology.

For more, read The Singularity.

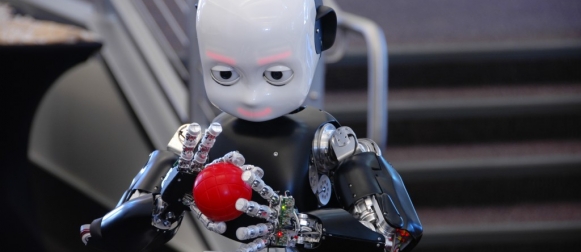

5) AI – Research Today

Current AI research mostly deals with limited topics within the large field of AI. This is also referred to as “narrow AI” or “weak AI”. For example, Google is researching visual intelligence. In 2012 they created software that was able to decide whether or not an image shows a cat. Other fields like speech recognition can be seen in software like Apple's Siri. You talk and the program “understands” what you want to say. For each area of human perception there are attempts to imitate it. Nevertheless, there is no general intelligence program that, like our brain, combines all of them in one entity, but there are at least some ambitious projects researching AGI. Finally, one interesting area is robotics: see State of the art robotics for more.

Unknown

January 20, 2013 at 22:50

A rocket with 80% of the parts of a full rocket is a huge step up from nothing, and takes a lot of effort, but it's still either going to fizzle or explode. You need 99% of the components to get a reasonable chance of success (and still rockets explode from time to time).

I'm suggesting a threshold effect here, where you literally cannot help by building an AGI without having solved the problem of transferring human morality to an intelligent system.

In this situation I would not suggest we stand idle while things go wrong. I suggest furious effort towards the parts of the problem that need to be solved first. This is something akin to making the CEV proposal rigorous, or replacing it with something that works. Figuring out how to implement powerful intelligence is important too (actually a special case of the previous problem), but it's too early to start fueling up the rocket and planning for manned launches.

There are additional risks with getting close, but not quite close enough, and taking humanity to a bad place. 80% is a pretty huge probability to be assigning to AGI safety. That's the kind of safety probability I'd expect from a full FAI design that looks absolutely certain to work, that I'd verified from 10 different angles and established a machine-verified proof of correctness for. There's still a chance you've fundamentally screwed things up in an invisible way, even taking into account your layers of fundamental error correction mechanisms. If you're this certain I'd be very interested to know the details.

If someone with a less safe design was literally just about to push the launch button, that is one question.

In any other situation, it strikes me that there are fundamental FAI problems which, if you don't solve all of them, your probability of safety is < 0.1%. That is, even if you've understood a huge amount more than everyone else, your probability of safety might be equally tiny.

Something went wrong with this comment so I restored it. (Sent by browser Opera 8.01) - Ruslan H.